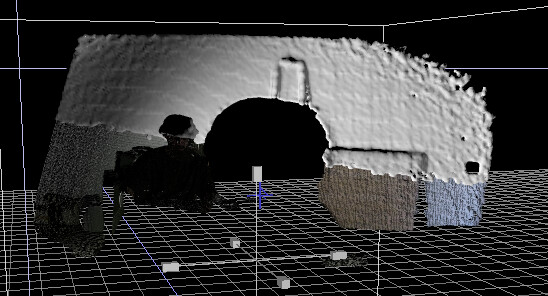

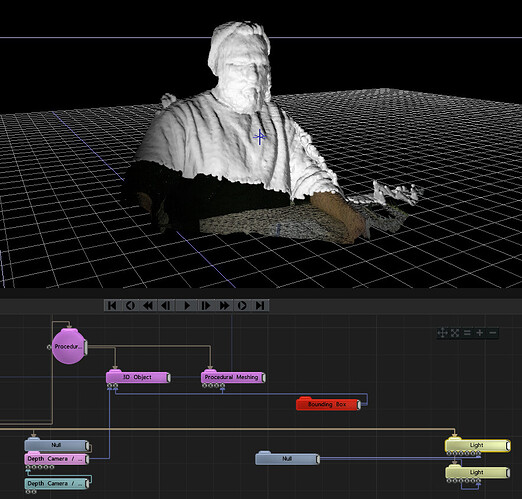

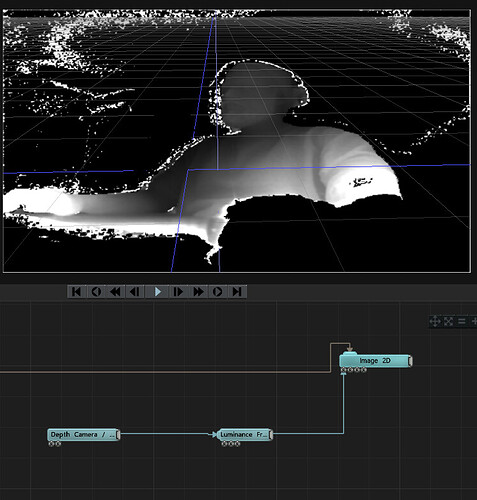

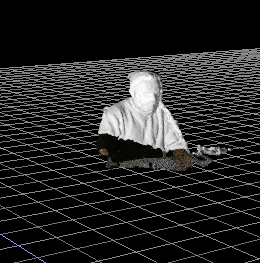

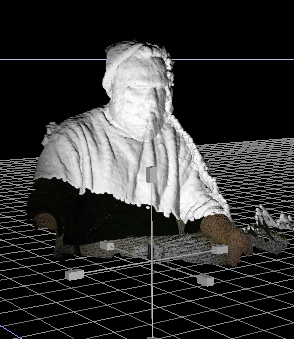

I keep having this strange problem: when I try to use a Kinect mesh to make a procedural mesh, I get a weird cutoff line, even though the bounding box covers the Kinect mesh and goes well beyond its bounds.

You can see there is a line going across my chest and hand, and you can see the Kinect mesh underneath.

Also worth mentioning: the Kinect mesh is parented to a null and is slightly rotated, so the line looks diagonal, but if I leave the Kinect mesh rotation at 0, 0, 0 the line is perfectly horizontal. Another thing worth mentioning is that if I move the Kinect mesh on the Y axis, the procedural mesh follows the Kinect mesh and the line does not shift on my body. The procedural root node and all its children do not move with the Kinect mesh, so it is not as if I am also translating the bounding box as a child of the Kinect mesh.

I also tried to see if it works at different scales, and the line rescales to the same relative position.

I tried it on both Kinect 2 and Azure Kinect and always get this bug.

Edit 1: Bumping up the mesh resolution scale to 1.3 in the Kinect mesh attributes seems to fix the problem, but if I bump up the far clip plane to reveal the back wall I get weird artefacts and the line shifts even higher, so I end up bumping the mesh resolution scale even higher. Playing with the Kinect depth scale on the depth camera node shifts the line higher or lower, depending on how far I go up or down from the original Kinect depth scale value.