Hi! Is there any way to make a camera fade in/fade out? I assumed this would be implemented in the camera node, around the Far/Near clipping settings, but unfortunately I didn't find it there. Maybe there is some trick to achieve this? All I need is to smoothly fade an object from alpha 0 to alpha 100 depending on the distance from the camera to the object.
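For reference, the distance-based fade being asked for boils down to a ramp like the one below. This is a minimal sketch in plain Python, not a Notch API: `fade_alpha`, `fade_start` and `fade_end` are hypothetical names, and it assumes the object should be invisible close to the camera and fully opaque from `fade_end` onwards. The resulting value would still have to drive an alpha-blended material, so the sorting limitations raised in this thread still apply.

```python
import math


def fade_alpha(camera_pos, object_pos, fade_start, fade_end):
    """Opacity in [0, 1] based on camera-to-object distance.

    Hypothetical helper: 0.0 at or closer than fade_start, 1.0 at or beyond
    fade_end, with a smoothstep ramp in between. Multiply by 100 for a
    0-100 alpha scale.
    """
    distance = math.dist(camera_pos, object_pos)
    if fade_end <= fade_start:
        # Degenerate range: treat it as a hard cut at fade_end.
        return 1.0 if distance >= fade_end else 0.0
    t = (distance - fade_start) / (fade_end - fade_start)
    t = min(max(t, 0.0), 1.0)
    # Smoothstep eases in and out instead of a linear ramp.
    return t * t * (3.0 - 2.0 * t)


# Object 3 units away, fading in between 2 and 4 units from the camera.
print(fade_alpha((0, 0, 0), (0, 0, 3), fade_start=2.0, fade_end=4.0))  # 0.5
```

To fade objects out as they move away instead, use `1.0 - fade_alpha(...)`.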

Not entirely sure if I understand what you want to achieve. A camera can only be active or inactive, so the camera itself cannot fade. However, you can use a full-screen black Image2D and fade that up and down as needed.
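As a rough illustration of what fading a full-screen black overlay does to the final image, here's a minimal sketch. It uses the standard "over" blend with a black layer and a hypothetical linear ramp over time (`overlay_alpha_at`, `start_s` and `duration_s` are illustrative names, not Notch parameters); in Notch the equivalent would be fading the Image2D itself.

```python
def overlay_alpha_at(time_s, start_s, duration_s):
    """Linear 0 -> 1 ramp for the overlay's opacity, clamped outside the fade."""
    if duration_s <= 0:
        return 1.0 if time_s >= start_s else 0.0
    t = (time_s - start_s) / duration_s
    return min(max(t, 0.0), 1.0)


def fade_to_black(scene_rgb, overlay_alpha):
    """Composite a black overlay over the scene: black * a + scene * (1 - a)."""
    return tuple(c * (1.0 - overlay_alpha) for c in scene_rgb)


# Halfway through a 2-second fade, the scene is at half brightness.
a = overlay_alpha_at(1.0, start_s=0.0, duration_s=2.0)
print(fade_to_black((1.0, 0.5, 0.25), a))  # (0.5, 0.25, 0.125)
```

Fading back up is the same ramp run in reverse, i.e. using `1.0 - a` as the overlay opacity.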

If you want to smoothly fade objects in and out, you'll want to look at alpha blending or render layers. Both come with their own limitations, but it's difficult to suggest anything from what we've got so far. Could you be more specific, or offer some visual references?

He's talking about smooth transparency (fade-in/fade-out) for 3D objects. I very often need to smoothly change the transparency of 3D objects. Unfortunately, this is only possible with alpha-blended materials, and alpha-blended materials don't work correctly with z-depth, lighting, etc. Any 3D modelling app can do this, but Notch can't.

Alpha blending and z-depth is another big problem. Try cloning 3D objects or image planes with alpha-blended materials and you get incorrect overlap. Alpha to Coverage is not a solution because it doesn't allow smooth alpha.
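The "incorrect overlap" comes from the fact that the standard "over" blend is order-dependent: swapping which translucent layer is drawn last changes the result. A minimal sketch with two 50%-opaque colours on black (plain Python, illustrative values only, not tied to any particular renderer):

```python
def over(src_rgb, src_alpha, dst_rgb):
    """Standard 'over' blend: src * a + dst * (1 - a), per channel."""
    return tuple(s * src_alpha + d * (1.0 - src_alpha) for s, d in zip(src_rgb, dst_rgb))


BLACK = (0.0, 0.0, 0.0)
RED = (1.0, 0.0, 0.0)    # assume red is nearer the camera
GREEN = (0.0, 1.0, 0.0)  # assume green is behind it

# Correct: draw the far surface first, then the near one on top.
back_to_front = over(RED, 0.5, over(GREEN, 0.5, BLACK))
# Wrong order, e.g. objects drawn in list order instead of depth order.
front_to_back = over(GREEN, 0.5, over(RED, 0.5, BLACK))

print(back_to_front)  # (0.5, 0.25, 0.0)
print(front_to_back)  # (0.25, 0.5, 0.0)
```

Clones are close to a worst case for this, since many translucent copies overlap and each pair has to be drawn in the right order to composite correctly.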

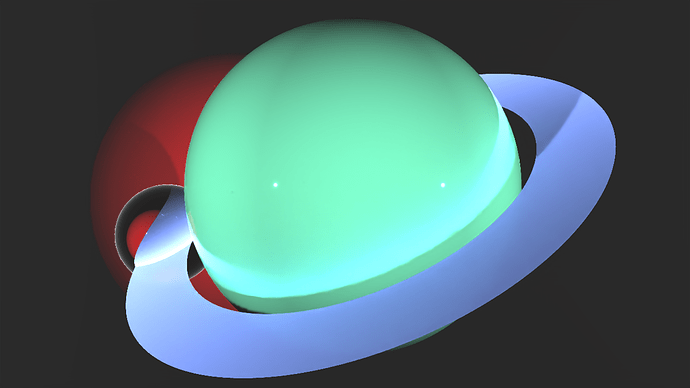

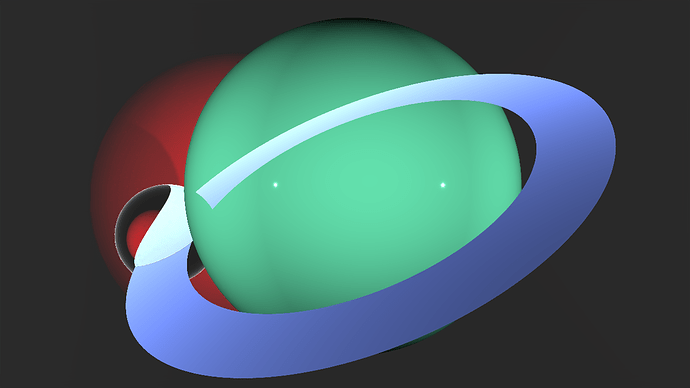

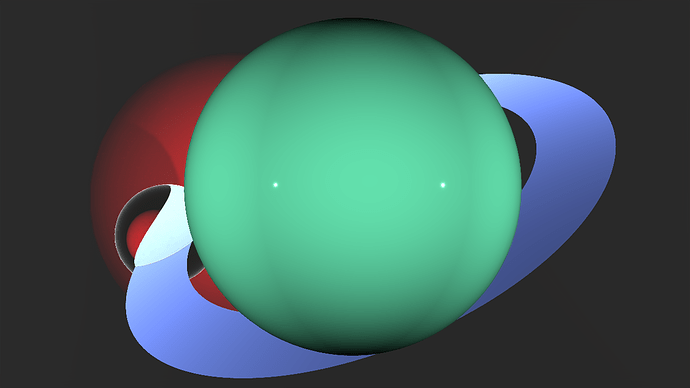

This explains a lot of the issues I've had with alpha blending! Looking into it further, there is something funky going on with alpha rendering and object ordering. The fastest way to check is to start with two overlapping objects, both set to opaque.

After swapping the materials for the green sphere and the ring from opaque to Alpha Blended, with an Alpha value of 1, I would expect to get the same image. However, the two objects now seem to be rendered separately and then alpha blended on top of each other according to their position in the nodegraph.
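One way to reason about this: at alpha 1 the blend simply replaces what's underneath, so if the blended objects don't write depth, whichever one is drawn last wins at every pixel, whereas the opaque path keeps the nearest surface. A toy per-pixel model (my own simplification, not Notch's actual renderer; the fragment depths are made up, and only blended-vs-blended overlap is modelled):

```python
def resolve_opaque(fragments):
    """Depth test + depth write: the nearest fragment wins regardless of draw order."""
    depth_buffer = float("inf")
    color = None
    for name, depth in fragments:  # fragments in draw (nodegraph) order
        if depth < depth_buffer:
            depth_buffer = depth
            color = name
    return color


def resolve_blended_alpha1(fragments):
    """No depth write among the blended objects: with alpha 1 each draw replaces the last."""
    color = None
    for name, _depth in fragments:
        color = name  # src * 1 + dst * 0 == src
    return color


# The ring passes in front of the sphere at this pixel, but the sphere is drawn last.
pixel = [("ring", 4.0), ("sphere", 5.0)]
print(resolve_opaque(pixel))          # ring
print(resolve_blended_alpha1(pixel))  # sphere
```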

The Glass(RT) sphere seems to behave as expected.

Given how differently alpha blending is handled across applications, it would be helpful to get clarification on how to approach this. The Alpha To Coverage option behaves as expected at an alpha value of 1, but it doesn't produce a gradual blend; the only change is that the object turns transparent at values below 0.2.
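On the Alpha To Coverage side: in general, A2C converts alpha into a multisample coverage mask, so with N samples per pixel there are only N + 1 possible opacity levels rather than a continuous range. A simplified sketch (the sample count is an assumption, it ignores the mask dithering some GPUs apply, and it doesn't try to reproduce the exact cutoff seen in Notch):

```python
def covered_samples(alpha, sample_count):
    """Number of MSAA samples switched on for a given alpha (round half up)."""
    alpha = min(max(alpha, 0.0), 1.0)
    return int(alpha * sample_count + 0.5)


def effective_opacity(alpha, sample_count):
    """Opacity after the samples are resolved: always a multiple of 1 / sample_count."""
    return covered_samples(alpha, sample_count) / sample_count


# Sweep alpha from 0 to 1 with 4x MSAA and collect the distinct results.
levels = sorted({effective_opacity(a / 100, 4) for a in range(101)})
print(levels)  # [0.0, 0.25, 0.5, 0.75, 1.0]
```

That stepping is why A2C can't give the smooth fade asked for at the top of the thread.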

I'll try to split this response up, as we're now discussing a number of different things and I think we've gotten a bit derailed.

@berezyuk.alex I'm still curious about what you're looking to do, so if you can follow up with more information I'd be happy to take a look at the scene and give some advice. If this post has become a bit messy, feel free to contact me through support: https://www.notch.one/support/contact-us/

@joziks Alpha transparency and z-depth is a long-running issue in real-time rasterised graphics, so I'm curious what you're referring to regarding other tools. If you could provide some examples in a separate forum post, I'd be interested to see how other tools have navigated the problem. (I just don't want to be answering three different people in one thread.)

@martinus Those issues look pretty standard for alpha sorting and z-depth. Basically, alpha-blended objects don't write to the depth buffer, so they have no way of knowing which part of their surface is in front of another surface. For simple cases, the distance from each object's origin to the camera can be used to sort them, but when you have interlocking surfaces (like the ring, which is both in front of and behind the sphere) you will get erroneous results like those above.
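To make the interlocking case concrete, here's a toy sketch of origin-based sorting (plain Python, made-up distances, a simplification rather than any renderer's real code). Because one sort order is used for the whole object, it can only be right on one side of the ring:

```python
from dataclasses import dataclass


@dataclass
class Fragment:
    obj: str
    depth: float  # distance from the camera to this surface at this pixel


# Hypothetical scene: the ring's origin sits just behind the sphere's origin...
ORIGIN_DISTANCE = {"sphere": 5.0, "ring": 5.2}

# ...but the ring passes in front of the sphere at pixel A and behind it at pixel B.
PIXELS = {
    "A": [Fragment("sphere", 5.0), Fragment("ring", 4.0)],
    "B": [Fragment("sphere", 5.0), Fragment("ring", 6.0)],
}


def front_by_object_sort(fragments):
    """Back-to-front by object origin (one order per frame); the last drawn ends up in front."""
    order = sorted(fragments, key=lambda f: ORIGIN_DISTANCE[f.obj], reverse=True)
    return order[-1].obj


def front_by_pixel_depth(fragments):
    """What should be in front: the nearest surface at this specific pixel."""
    return min(fragments, key=lambda f: f.depth).obj


for pixel, frags in PIXELS.items():
    print(pixel, front_by_object_sort(frags), front_by_pixel_depth(frags))
# A sphere ring    <- wrong: sphere drawn over the part of the ring in front of it
# B sphere sphere
```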

Glass RT materials are fine because they do actually write to the z-buffer; with ray tracing, rays can simply pass through them and continue on to draw whatever is behind them.
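A rough way to see why ray tracing sidesteps the ordering problem: each ray can collect every surface it hits, sort those hits by distance for that ray alone, and composite them front to back, so the order objects appear in the scene doesn't matter. A simplified sketch of that general idea (not how Notch's Glass RT material is actually implemented; the hits and colours are made up):

```python
def composite_along_ray(hits, background_rgb):
    """Front-to-back compositing of (distance, rgb, alpha) hits along one ray."""
    color = [0.0, 0.0, 0.0]
    transmittance = 1.0  # fraction of light still passing through
    for _distance, rgb, alpha in sorted(hits, key=lambda h: h[0]):
        for i in range(3):
            color[i] += transmittance * alpha * rgb[i]
        transmittance *= 1.0 - alpha
    # Whatever light gets through every surface comes from the background.
    for i in range(3):
        color[i] += transmittance * background_rgb[i]
    return tuple(color)


hits = [(5.0, (0.0, 1.0, 0.0), 0.5),   # green surface, further away
        (4.0, (1.0, 0.0, 0.0), 0.5)]   # red surface, nearer
print(composite_along_ray(hits, (0.0, 0.0, 0.0)))                  # (0.5, 0.25, 0.0)
print(composite_along_ray(list(reversed(hits)), (0.0, 0.0, 0.0)))  # (0.5, 0.25, 0.0)
```

Both input orders give the same pixel, because the sort happens per ray rather than per object.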

Yes! This is a big problem. I often clone images or 3D objects with an alpha channel (alpha-blended), and this can't be done correctly because z-depth is interpreted incorrectly. Perhaps the developers can tell us what makes this bug difficult to fix.

As @ryan.barth mentioned, please provide some examples of how you've solved the issues you're referring to in other real-time rendering tools (in a new thread please, so as not to complicate the OP's question), as they don't come across as "bugs" or "incorrect interpretation of z-depth", but rather as standard limitations of real-time rendering without ray tracing.