Hello

I want to use an Azure Kinect on an external computer and stream it into Notch.

So far, I have gotten TouchDesigner to take the color and black-and-white depth images and send them out. I can get them into Notch and plug the color into the Colour Source and the depth into the Depth Source. I have had some success, though it's not great: with the Preview in Viewport checkbox enabled I can see them mixing together, and I am able to adjust the Clip Plane to some degree… but I am not getting it to show up in the Depth Camera/Kinect Mesh render. Does the depth image need specific attributes? Any tips on how best to combine color and depth from an external source into the Depth Camera/Kinect Mesh?

Any sample images? Can you extract the depth image from a Kinect stream inside Notch and send it from one Notch computer to another? I'm trying to stream the images across the internet and recombine them on the other side.

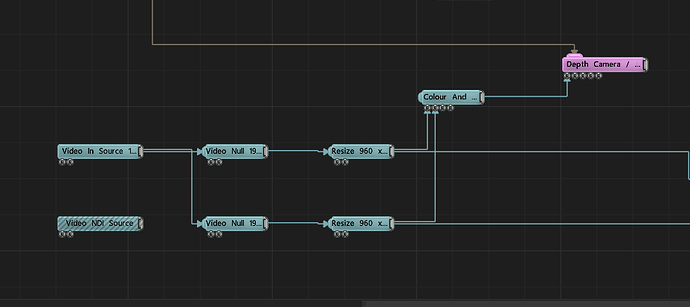

Here are my nodes. The feed comes in as one image and gets split into two. Am I missing something in connecting the colour and depth to the mesh?

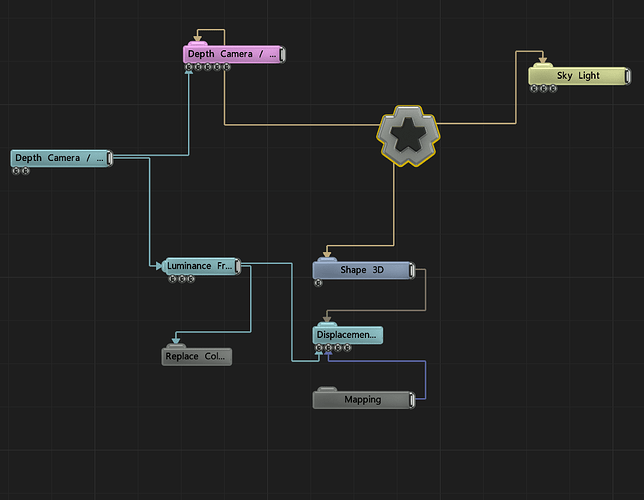

It looks like the Colour and Depth Source node has a problem with the Depth Camera/Kinect Mesh. For now I would suggest going through a Shape 3D plane and a displacement node instead.

Thanks, Ryan

Hi Ryan,

I’m having issues with ‘Colour and Depth Source’ as well. Has something changed, or will something change in the short term, about the functionality of this node?

I need to edit the depth map with some post-FX nodes and pipe it back to geometry. I had no luck getting ‘Kinect Mesh’ to recognise the edited depth map, so, as you suggested, I used a Shape 3D plane and a displacement node, but the result is badly quantized. What am I missing?

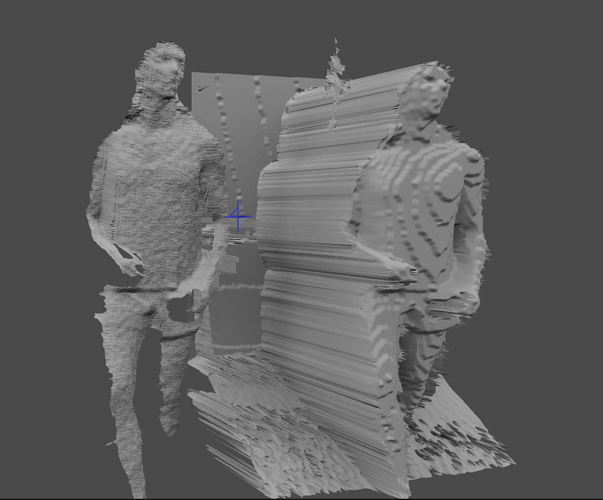

Here I attach my setup and a comparison between the Kinect Mesh (on the left) and the displaced plane (on the right).

This question was handled directly through support. To summarise: the Depth image outputs an 8-bit depth image, which is why the displaced plane looks stepped in comparison with the Kinect Mesh. We may improve this in future though.
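
For anyone wondering why 8 bits produces visible steps, here is a rough Python sketch of the arithmetic. It is not how Notch does it internally. It assumes depth is mapped linearly between a near and a far plane, and `NEAR_M`, `FAR_M` and the helper names are made-up values for illustration only.

```python
def step_size(near_m, far_m, bits):
    """Smallest depth difference (metres) representable at this bit depth."""
    return (far_m - near_m) / ((1 << bits) - 1)


def quantize(depth_m, near_m, far_m, bits):
    """Round a depth to the nearest representable level and return it in metres."""
    levels = (1 << bits) - 1
    t = (depth_m - near_m) / (far_m - near_m)
    t = min(max(t, 0.0), 1.0)          # clamp to the near/far range
    level = round(t * levels)          # this rounding is where the steps come from
    return near_m + (level / levels) * (far_m - near_m)


# Hypothetical clip range, chosen only for the example.
NEAR_M, FAR_M = 0.5, 5.0

# A smooth 10 cm slope sampled 1000 times (e.g. across a face or a hand).
ramp = [2.0 + i * 0.0001 for i in range(1000)]

distinct_8 = len({quantize(d, NEAR_M, FAR_M, 8) for d in ramp})
distinct_16 = len({quantize(d, NEAR_M, FAR_M, 16) for d in ramp})

print(f"8-bit step:  {step_size(NEAR_M, FAR_M, 8) * 1000:.2f} mm")
print(f"16-bit step: {step_size(NEAR_M, FAR_M, 16) * 1000:.3f} mm")
print(f"distinct depths on the ramp: 8-bit={distinct_8}, 16-bit={distinct_16}")
```

With these assumed numbers, the 8-bit version can only place the smooth slope on a handful of depth levels, so a displaced plane shows terraces. A 16-bit depth image has room for far more levels across the same range.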